Amazon makes EC2 stickier with default virtual private clouds

Software stacks jump across AWS regions, but still can't live migrate

The evolution of the Amazon Web Services cloud has proceeded at a steady pace since 2006, and while a number of companies have built up cloud businesses and closed the gaps, Amazon keeps moving ahead. Two recent tweaks to the Amazon cloud make it that much more useful, and therefore more sticky for the applications running upon it.

What's old is new again. Amazon Web Services is creating a modern, integrated minicomputer with everything you might possibly need, only this one is shared by hundreds of thousands of customers and does things that those beloved VAXes and AS/400s couldn't even imagine.

The technology might be different, but the tactic is the same: Make it easy to use and clever, and people will pay a premium for it, write their applications on it, and find it damned near impossible to ever leave. Extract profits.

The two recent changes, announced in the Elastic Compute Cloud (EC2) release notes, allow Amazon Machine Images (AMIs) to be copied from one AWS region to another and make virtual private clouds the default setup for EC2 compute services.

The first update to EC2 – being able to copy an AMI across regions – might seem obvious, and you might even be thinking that this functionality must surely have already existed. But you would be wrong.

Amazon did not respond to requests for comment as El Reg went to press, but it is a fairly safe presumption that the custom Xen hypervisor used in Amazon's various global data centers is compatible across those data centers, which are clustered in what the company calls regions. So compatibility was not the issue. Bandwidth probably was.

Copying an AMI between regions is no doubt useful, and probably has been enabled through Amazon boosting the network capacity between regions in its operations and perhaps boosting the I/O performance of its Elastic Block Store (EBS) service as well as the I/O coming off local storage on the EC2 instances. (People have been complaining about poor EBS performance for years, so this may be a sign that it is getting better.)

The AMI copies are initiated from the destination site through API calls or the AWS Management Console, and the result is not a linked clone. With linked cloning, which could be very useful indeed, changes made in one AMI running in one region would be propagated to the copy running in another region.
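The destination-initiated copy described above can be sketched in a few lines. This is a minimal illustration, not Amazon's own tooling: it assumes Python and the boto3 SDK (which postdates this article), and the helper name `copy_ami_to_region` is hypothetical. The helper expects an EC2 client that was created for the destination region and names the source region in the request.

```python
def copy_ami_to_region(dest_client, source_image_id, source_region, name):
    """Ask the destination region to pull a copy of an AMI from another region.

    dest_client is assumed to be a boto3 EC2 client created for the
    DESTINATION region; the source region is passed as a request parameter.
    """
    response = dest_client.copy_image(
        Name=name,
        SourceImageId=source_image_id,
        SourceRegion=source_region,
    )
    # The result is a new AMI with its own ID in the destination region.
    # It is an independent copy, not a linked clone of the source image.
    return response["ImageId"]
```

With credentials configured, a call such as `copy_ami_to_region(boto3.client("ec2", region_name="ap-southeast-2"), "ami-12345678", "us-east-1", "web-tier-copy")` would request the copy in Sydney; the AMI ID and name here are placeholders.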

The AMI copying is similarly not a live migration – you are not throwing a running EC2 instance from one region to another, but man, that would be fun to see and probably quite useful. El Reg bets that Netflix, among others, would love such a feature.

Copying AMIs across regions is a start, and doing live migrations within a region, much less across them, will take a whole lot more bandwidth and very likely substantial tweaking to Amazon's Xen hypervisor and its networks. But fear not: AWS will get there.

If you read the nitty-gritty details of the AMI copying feature, you will see that any AMI type or operating system supported by AWS can be copied. The copy function is free for both Windows and Linux AMIs, but obviously once the AMI is fired up in a running EC2 instance, different per-hour charges will apply if the regions have different pricing for capacity.

Incidentally, you can only copy AMIs that you own. You cannot copy any AMIs made by Amazon or partners in the AWS Marketplace. No word on how long it takes to copy an AMI from one region to the other, but obviously it will depend on the size of the image and the traffic on Amazon's network.

You might want to schedule the copies for times when it is not prime time (8 PM through 11 PM) in either the source or the destination region, given the load that Netflix places on AWS. (We are only half joking.)

Virtual private parts

In a separate announcement, AWS has enhanced its virtual private cloud software for EC2 and made VPC a default for new EC2 instances.

Rather than just leave this to the release notes, Jeff Barr, the evangelist at AWS, put out a blog post to explain the VPC enhancements and why this change is important. Barr also walked through a bit of history, showing how Amazon's VPC capability has evolved over time.

Amazon rolled out its initial VPC back in the summer of 2009, letting EC2 customers link virtual server images together on a network that was isolated from the networks of other customers and accessible by an IPsec-encrypted virtual private network from the Internet.

In March 2011, Amazon gave customers control over many of the network settings they lord over in their own data centers: IP address ranges, subnets, and configuration of route tables and network gateways. Shortly thereafter, AWS let customers have dedicated VPC instances, which literally meant pinning specific EC2 workloads to specific servers on specific virtual and isolated networks, creating what was in effect a hosted private cloud.

The VPC offering was rolled out globally in the summer of 2011, including the AWS Direct Connect service, which is a low-latency, fat pipe into AWS data centers to link them to a company's internal data centers.

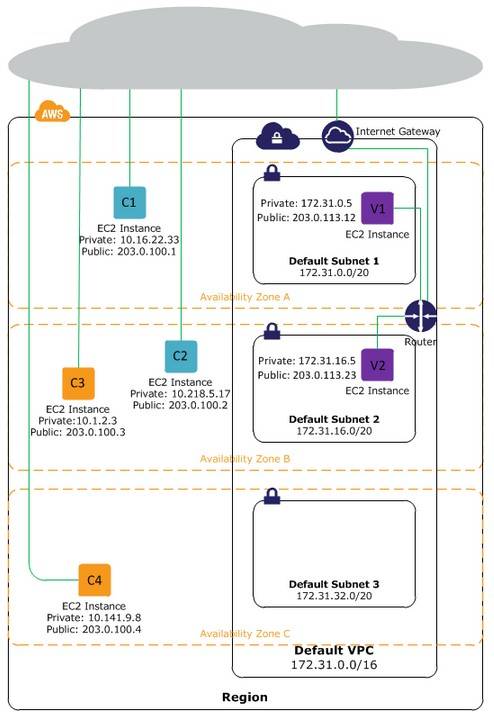

The new default virtual private cloud setup for EC2 compute clouds is called EC2-VPC, and the old way (where VPC was not set up by default but available nonetheless in a slightly different form) is being referred to as EC2-Classic. Here's how they look conceptually:

Contrasting EC2-Classic and EC2-VPC virtual private networking

To make things more complex, there are actually three different kinds of VPC. There is the EC2-Classic, the new default VPC (called EC2-VPC as above) and a non-default VPC that has some of the features of the default VPC, but is not turned on automagically when you fire up EC2 instances.

You can see the differences between these three VPCs in this section of the AWS user guide. The capabilities of the default VPC are probably what is important because this is the new normal.

With the default VPC service, EC2 instances launch in a default subnet and get a public IP address, and that EC2 slice gets a private IP address from the range of the default VPC. You can assign multiple IP addresses to an EC2 instance, and an Elastic IP address, if you use that service, sticks to that instance even when you halt it.

With the EC2 cloud's classic VPC service, an instance just gets a public IP address and the private address and Elastic IP address have to be assigned each time you start up the EC2 slice. (This is annoying, and it is a wonder why it was done this way in the first place, but presumably it had something to do with cycling back unused IP addresses and the ease of programming of the VPC itself by Amazon.)

In the past, with the EC2-Classic setup, you had to terminate your instance to share a security group, you had to run on shared hardware, and you could add security rules for inbound traffic only.

The new default VPC, like the non-default VPC that has been evolving over the past four years, lets you change security groups of running instances, lets you add rules for inbound and outbound traffic, and lets you run on shared or dedicated instances. The big change with the new default VPC is that DNS hostnames are enabled by default, which was not the case with the non-default VPC.
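The differences listed above can be laid out as a small lookup table. The sketch below is illustrative only: the class, field names, and helper are hypothetical, and the values simply restate the claims in this article rather than the AWS user guide. `None` marks a point the article does not address.

```python
from dataclasses import dataclass, fields
from typing import Optional


@dataclass(frozen=True)
class Platform:
    name: str
    change_groups_while_running: bool  # swap security groups without terminating
    outbound_rules: bool               # rules for outbound as well as inbound traffic
    dedicated_hardware: bool           # option to run on dedicated instances
    dns_hostnames_by_default: Optional[bool]  # None: not stated in the article


CLASSIC = Platform("EC2-Classic", False, False, False, None)
DEFAULT_VPC = Platform("default VPC", True, True, True, True)
NON_DEFAULT_VPC = Platform("non-default VPC", True, True, True, False)


def differing_features(a, b):
    """Return the names of the features on which two platforms differ."""
    return [
        f.name
        for f in fields(a)
        if f.name != "name" and getattr(a, f.name) != getattr(b, f.name)
    ]
```

Calling `differing_features(DEFAULT_VPC, NON_DEFAULT_VPC)` returns only the DNS hostname default, which matches the "big change" called out above.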

With the default VPC update, in fact, all EC2 instances will have a private and a public DNS hostname; any existing VPCs that you already built will have them off, but you can turn them on as you see fit. DNS resolution is turned on with the new default VPC, but you can turn off Amazon's DNS service if you want.
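Turning DNS hostnames on for a VPC you built earlier might look like the following. This is a hedged sketch, assuming Python and a boto3 EC2 client (an SDK that postdates this article); the helper name `enable_dns_hostnames` is hypothetical. It enables DNS resolution first, then hostnames, in two separate requests.

```python
def enable_dns_hostnames(ec2_client, vpc_id):
    """Turn on DNS resolution and DNS hostnames for an existing VPC.

    ec2_client is assumed to be a boto3 EC2 client for the VPC's region.
    The two attributes are sent in separate requests because the EC2 API
    does not accept both in a single ModifyVpcAttribute call.
    """
    # DNS resolution (Amazon's DNS service) goes first; hostnames depend on it.
    ec2_client.modify_vpc_attribute(
        VpcId=vpc_id, EnableDnsSupport={"Value": True}
    )
    ec2_client.modify_vpc_attribute(
        VpcId=vpc_id, EnableDnsHostnames={"Value": True}
    )
```

Passing `{"Value": False}` for `EnableDnsSupport` in a similar call would be the way to switch off Amazon's DNS service for a VPC.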

Amazon is also now allowing for the ElastiCache caching service to have nodes created and tied to the VPC, and Relational Database Service (RDS) instances can be provisioned to either face the Internet (so they can be accessed from external data centers or EC2-Classic networks) or face the instances on a shiny new VPC. Any shell scripts you have created, CloudFormation templates, or Elastic Beanstalk applications will work with the EC2-VPC default virty private fluff.

You network gurus will no doubt find a lot more of interest in these developments than an infrastructure hack will, but here is the important thing. Just as developers don't want to use up valuable time on server infrastructure, they don't want to waste time on networking.

Anything that Amazon does to make it easy to stand up EC2 instances and its various platform services and glue them together into a system makes AWS that much more useful to companies that want to focus on applications, not on infrastructure.

Now you don't have to create the VPC linking your stuff. Amazon will do it. And presumably, given its expertise, it can optimize its networks and make them perform better, but this may not be about that. Yet.

AWS is taking it slow rolling out this new EC2-VPC default virtual private network, and Barr says that new EC2 customers – and only new customers – in its Asia/Pacific region in Sydney, Australia and its South American region in São Paulo, Brazil will have this new VPC enabled. Barr said he will update his post each time a new region gets the EC2-VPC default setting. ®